Style transfer is a recent development in deep neural networks that allows the style of one image to be transferred onto another. It has been well researched for 2D images, but transferring style onto 3D reconstructed content leaves room for further development. We achieve this using the Implicit Differentiable Renderer (IDR), which takes masked images as input and trains two neural networks, one that learns the geometry and one that learns the appearance. Rendered views of the object are stylized using 2D neural style transfer (NST) methods, and the resulting style information is used to further train the appearance network so that it displays the given style. The appearance renderer is normally trained on only patches of the rendered image to save memory, whereas these style transfer methods are designed for full-resolution images; masked deferred back-propagation lets us optimize the renderer under both constraints.

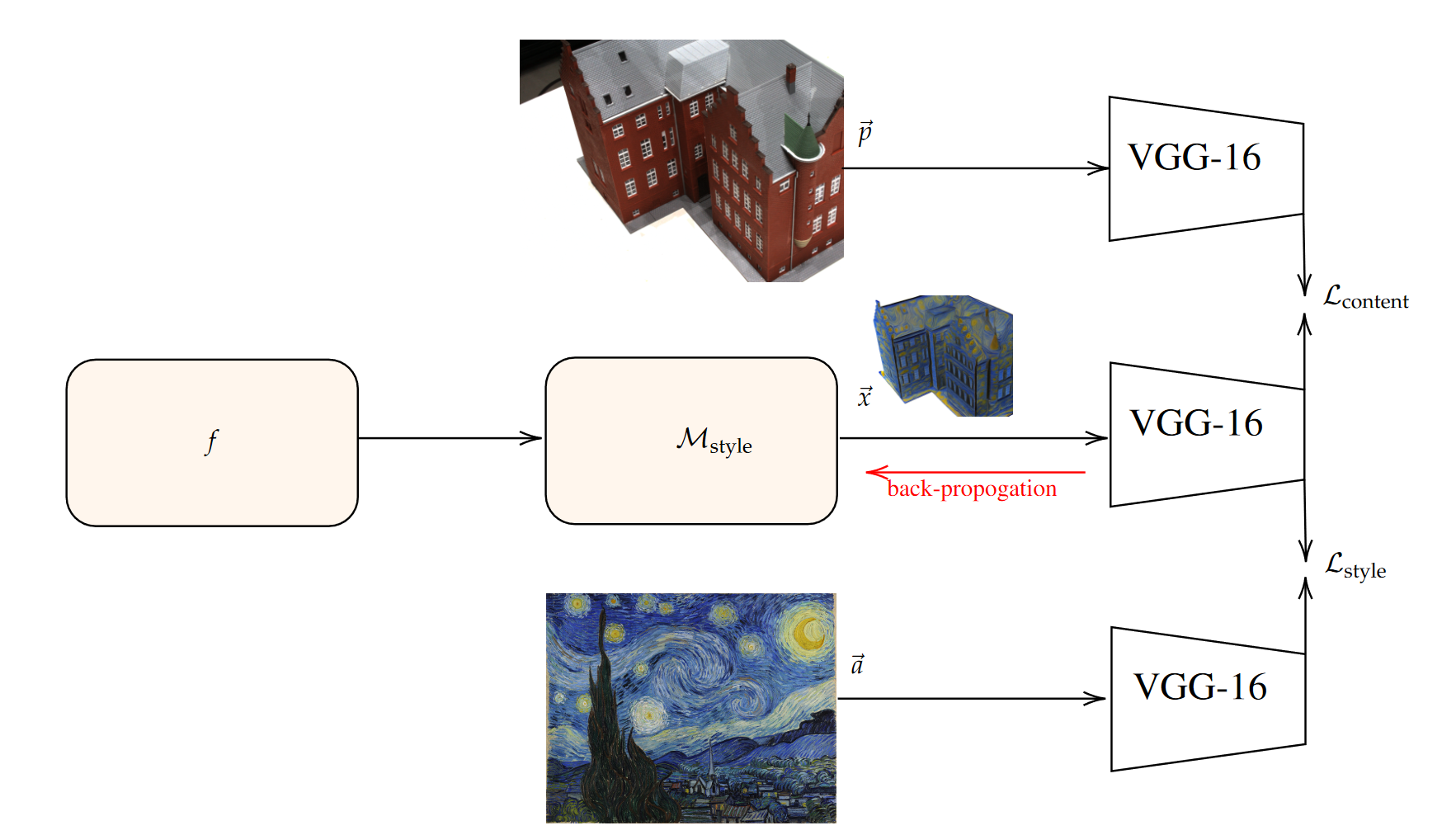

3D style transfer is achieved by first training both the geometry f and the appearance M using IDR. We then train a copy of the appearance renderer, Mstyle, to further stylize the appearance based on a given style image. An image of the surface is rendered, and the content and style losses are computed using 2D style transfer approaches. The resulting gradients are then back-propagated to optimize Mstyle. To reduce memory usage, we adapt deferred back-propagation from Artistic Radiance Fields to our masked input.
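The two-pass idea behind masked deferred back-propagation can be illustrated with a minimal NumPy sketch. Everything in it is an assumption made for illustration: `render` is a toy linear layer standing in for Mstyle, `image_loss_and_grad` is a masked squared error standing in for the style and content losses, and the function names and patch size are hypothetical rather than taken from the repository. The first pass renders the full image and caches the loss gradient per pixel; the second pass revisits the image patch by patch, skips patches that lie entirely outside the mask, and accumulates the parameter gradient.

```python
import numpy as np

def render(features, weights):
    """Toy stand-in for the appearance renderer: one linear layer per pixel."""
    return features @ weights  # (H, W, K) @ (K, 3) -> (H, W, 3)

def image_loss_and_grad(image, target, mask):
    """Stand-in for the style/content loss on the full-resolution image.

    Returns the masked mean squared error and its gradient w.r.t. the image.
    """
    diff = (image - target) * mask[..., None]
    n = max(int(mask.sum()), 1)
    return float((diff ** 2).sum() / n), 2.0 * diff / n

def masked_deferred_grad(features, weights, target, mask, patch=4):
    # Pass 1: render the whole image (no parameter gradients needed yet)
    # and cache dL/d(pixel) for every pixel.
    image = render(features, weights)
    loss, pixel_grad = image_loss_and_grad(image, target, mask)

    # Pass 2: push the cached pixel gradients through the renderer one
    # patch at a time, so only one patch is "live" at any moment.
    grad_w = np.zeros_like(weights)
    visited = 0
    height, width, k = features.shape
    for y in range(0, height, patch):
        for x in range(0, width, patch):
            if not mask[y:y + patch, x:x + patch].any():
                continue  # patch is pure background: its gradient is zero
            f = features[y:y + patch, x:x + patch].reshape(-1, k)
            g = pixel_grad[y:y + patch, x:x + patch].reshape(-1, 3)
            grad_w += f.T @ g  # chain rule for the linear toy renderer
            visited += 1
    return loss, grad_w, visited
```

Because background pixels contribute zero gradient, the accumulated result matches the gradient of a single full-image backward pass, while only the patches that overlap the mask are processed. In a real setup the second pass would re-render each patch with automatic differentiation enabled instead of using a closed-form gradient.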

The expected input for 3D reconstruction is the same as for IDR, plus a style image. Example datasets created by IDR can be found here. The dataset of the BK building shown above is included in the repository. You can also create your own dataset, using Photoshop to create the mask information and COLMAP to calculate the camera parameters.

We can train the style representation with or without content loss. Including content loss preserves more of the original appearance, at the cost of weaker stylization. For the BK building, the architecture text becomes more visible, but the windows also become less styled.
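The trade-off between content and style can be sketched as a weighted sum of two losses. The sketch below is an assumption, not the repository's implementation: it uses the Gram-matrix style loss common in 2D neural style transfer, the weights `content_weight` and `style_weight` are hypothetical names, and the feature maps are plain `(C, H, W)` arrays standing in for activations of a pretrained network. Setting `content_weight=0.0` corresponds to training without content loss.

```python
import numpy as np

def gram_matrix(feat):
    """Channel-by-channel correlation matrix of a (C, H, W) feature map."""
    c, h, w = feat.shape
    flat = feat.reshape(c, h * w)
    return flat @ flat.T / (c * h * w)

def style_loss(rendered_feat, style_feat):
    """Mean squared difference between the Gram matrices of two feature maps."""
    diff = gram_matrix(rendered_feat) - gram_matrix(style_feat)
    return float(np.mean(diff ** 2))

def content_loss(rendered_feat, content_feat):
    """Mean squared difference between the feature maps themselves."""
    return float(np.mean((rendered_feat - content_feat) ** 2))

def total_loss(rendered_feat, content_feat, style_feat,
               content_weight=1.0, style_weight=1.0):
    """Weighted sum; content_weight=0.0 disables the content term."""
    return (style_weight * style_loss(rendered_feat, style_feat)
            + content_weight * content_loss(rendered_feat, content_feat))
```

A larger `content_weight` penalizes deviation from the original rendering more strongly, which is consistent with the observation that content loss keeps details such as the text on the building legible while reducing how strongly other regions are styled.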

Acknowledgements